Went Well

The presentation tonight went very well, I think. George gave a nice introduction for me, and everyone was very patient through the talk, and in the end I think it worked well. And loads of people were very excited about the confluence of Kevin’s Vote Links and my Seeded Search ideas. Then in the Q-and-A, people asked about librarians, searches, Lessig, and the Disseminary.

I’ll put my version of the talk in the extended comments section below. There’s some risk that the papers from this conference will be published in print eventually, so if you see a mistake or an imprecise argument, please be kind enough to protect me from looking utterly foolish, and let me know what to change.

Cards, Links and Research

—

Teaching Technological Learners

Back at the first iteration of this conference, I proposed a way of thinking along with technology that took its cues from what was then the exemplary killer app of the internet: Napster. This evening I propose a technology lesson from another web application, the über-search engine Google, as a way of evaluating pedagogical practice in a technologically-saturated learning environment.

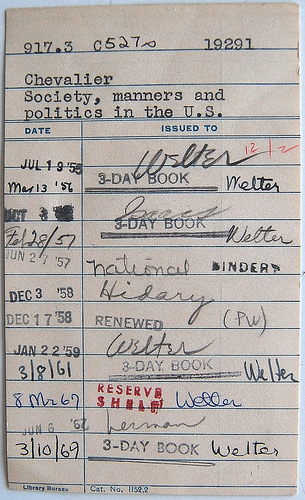

A long time ago, back when I was in seminary — before I even had a computer to type on — I discovered some fascinating secrets of academic research. These were the days when every book was checked in or out of the Yale Divinity School library by signing and filing a 3 × 5 card rather like this one.

My discovery was that I could read the circulation cards in the books I saw, to see whether my professors had taken these books out. If I was uncertain of the value of the book at which I was looking, I could see whether any (and how many) of my professors had taken the book out. If all three of the active professors in my field had bothered to take the book out, it suggested that the book really was worth my attention. If one had renewed it for several terms, it probably played a role in his on-going research. Not only that, I deduced that books that were located in the Union Seminary Classification section, down in the third level of basement under the Institute of Sacred Music, must not have circulated for the many years it had been since YDS Library switched to Library of Congress classification; obviously, these were not hot books on my subject. These and various other tricks helped me learn to recognize the academic sources that my teachers would also recognize, and to distinguish them from vernacular treatments.

I introduce this exercise in nostalgia not simply to impress upon my younger colleagues that once upon a time we used such primitive devices to regulate library collections, but more importantly to point to the tremendous value of metadata — contextual and para-textual information — in research. I was making decisions about the scholarly weight of particular viewpoints based not on the intrinsic quality of the arguments — not solely so, anyway — but on extrinsic markers that suggested that other people thought the book important (or unimportant). The classic instance of the value of metadata comes from The Social Life of Information:

I was working in an archive of a 250-year-old business, reading correspondence from about the time of the American Revolution. Incoming letters were stored in wooden boxes about the size of a standard Styrofoam picnic cooler, each containing a fair portion of dust as old as the letters. As opening a letter triggered a brief asthmatic attack, I wore a scarf tied over my mouth and nose. Despite my bandit’s attire, my nose ran, my eyes wept, and I coughed, wheezed, and snorted. I longed for a digital system that would hold the information from the letters and leave paper and dust behind.

One afternoon, another historian came to work on a similar box. He read barely a word. Instead, he picked out bundles of letters and, in a move that sent my sinuses into shock, ran each letter beneath his nose and took a deep breath, at times almost inhaling the letter itself, but always getting a good dose of dust. Sometimes, after a particularly profound sniff, he would open the letter, glance at it briefly, make a note and move on.

Choking behind my mask, I asked him what he was doing. He was, he told me, a medical historian. . . . He was documenting outbreaks of cholera. When that disease occurred in a town in the eighteenth century, all letters from that town were disinfected with vinegar to prevent the disease from spreading. By sniffing for the faint traces of vinegar that survived 250 years and noting the date and source of the letters, he was able to chart the progress of cholera outbreaks.*1*

In a similar vein, Austin Henderson of Xerox/PARC said, “one of the most brilliant inventions of the paper bureaucracy was the idea of the margin” — the margin, by which metadata annotations can amplify the data in the text proper, thus making the text’s data more useful. The more data available relative to a bit of information, the more valuable that information becomes; the less metadata, the less valuable the information.*2*

At a point in my academic years when I was not yet equipped to know the difference between Rudolf Bultmann and Joe Shlabotnik, such pertinent metadata as the condition of the book, the stature of those who had taken it out before me, and the physical location of the book all provided me clues to help me weigh what I should think of the work in question. These useful tidbits of metadata were readily available in the pre-electronic library. In the up-to-date library, not only do I not know who has taken a book out before me, but it’s widely considered a matter of the borrower’s privacy rights that I not be permitted to know.*3*

Some sorts of metadata persist in the modern library (right here at the United Library, some books are catalogued in the online system, whereas others can be retrieved only through using the card catalogue), but other sorts of metadata have disappeared, impoverishing the repertoire of information on which researchers can draw. In the modern library, metadata begins to disappear into inaccessible reserves; in the online library, metadata pulls an even more dramatic disappearing act. In online research, relatively few manifest signals of metadata appear to an untrained eye; instead of finding a helpful circumstantial clue to a work’s standing, a beginning scholar now needs expertise to get at the metadata. To a great extent, if you’re clever enough to get at the metadata, you’re clever enough to not need it.

This represents an acute turning point particularly for those institutions whose mission includes reaching out to learners whose main interest is less academic than pious and pastoral. Whereas a student in the 1980’s could count on abundant circumstantial data to help guide his research, in the 2000’s a student will find precious few breadcrumbs leading out of the forest. Yet more and more students rely more and more heavily on that [online] forest as a base for research. Just three years ago, at the previous Theology and Pedagogy in Cyberspace conference, I had occasion to sigh once or twice because I feared that my students would never get the hang of using the internet for research; now many of them are reluctant ever to look into the physical library collection.

Now, I’m not by any means the first one to notice this problem; numerous colleagues (Mark Goodacre, Felix Just, Torrey Seland, and Sheila McGinn) have devoted tremendous effort to compiling collections of more-or-less annotated links as a guide to their students, and I admire the diligence and charity that have gone into that work. The Wabash Center likewise has assembled a valuable guide to research on the internet. Pages of links offer researchers some of the metadata that online compendia strip away, and sometimes offer even more metadata.

The links page, however, entails certain definite pitfalls. The best of these provide annotations, but that’s hard and time-consuming, so useful annotations are uncommon. Moreover, several mechanical problems arise from links pages. First, they’re back-breakingly labor-intensive; only a researcher with good judgment and a troop of indentured student servants, or an independently wealthy researcher with no social life, can do a plausible job of keeping a links page quite up-to-date. Even then, new resources appear, contents of sites change, and the links page falls behind the inexorable forces of innovation and linkrot. Indeed, several of the above scholars reflected online about the burden of maintaining links pages, to which Seland (maintainer of the Philo of Alexandria links and the Resource Pages for Biblical Studies) observed, “I can foresee that it will be a more and more demanding task as a one-man work. . . The relevant material on the Internet is growing so rapidly, that it is hard to imagine what even the next year will bring.”*4* Without calling into question the generous and illuminating support of willing cataloguers and institutions, I suspect that links-pages will in a short while have to give way to the sheer brute accumulation of information.

Give way to what, however? Seland suggests developing specialized sites that concentrate on ever smaller bits of the online literature (thus replicating the specifically modern gesture of dividing up knowledge into smaller and smaller areas of authoritative expertise, to which we might well apply Ray Ozzie’s cautionary words: “We should distrust any elaborately planned, centrally deployed, and carefully developed business system or process. Successful systems and processes will be agile and dynamically adaptive; they’ll grow and evolve as needed over time.”*5*). The response of generating ever-narrower links pages too, however, is fated to fall by the wayside as the avalanche of online information sweeps across the academic landscape. For example: in 2000, Ian Balfour reported on the explosion of information about Tertullian online.*6* He noted 2000 scholarly publications in the 500 years since the printing of Tertullian’s Apologeticum; since the inauguration of the Web, he counted 921 Tertullian-oriented sites online. This morning, Google found more than 104,000 sites with a connection to Tertullian. Even allowing for extensive duplication, we may guess that Dr. Balfour no longer has time to keep an up-to-date Tertullian bibliography.

The besetting problem with links pages, however, lies more deeply in the ways technology and knowledge work than in the mere practicality of maintaining such endeavors. A links page functions as a throttle to knowledge; even as it promotes awareness of responsible research on its topic, its job is to restrict the flow of attention. That runs diametrically against both the path of information technology and the course that human inquiry ought to take. Although knowledge constitutes much more than the mere accumulation of information, one essential ingredient in durable, flexible learning involves distinguishing sound from unsound information — a capacity that one can’t develop if one always encounters information that’s already been filtered. Further, if one never departs from the academic pastures that one’s mentors have fenced in, one will never experience the provocation and possible inspiration that come from an encounter with undomesticated thinkers.

Further, a filtered-links approach vests a problematic authority in the links-page maintainer. Even the fairest, most astute filterers will assess particular pages differently, and though they may agree on obviously reliable or unreliable sources, at the exact spot where they would be most useful, the soundness of sources whose brilliance or inanity isn’t obvious, they will diverge. And not every filterer shows the full degree of fairness and astuteness. Perhaps the links-page maintainer concerns herself primarily with the New Testament, but ventures out from that specialization to offer some pointers on patristics; should a researcher trust the patristic links as much? When a library establishes a links page to guide researchers, which librarian, with what degree of familiarity with the field, determines which pages should be linked and which should not? Is there metadata on the links page to indicate the degree of confidence with which the linker promulgates those links, and the degree of confidence that other scholars have in the linker’s judgment? (Yes, there is, in a way; but we’ll get to that in a few minutes.)

Filtered links not only hobble our students’ intellectual growth — they also militate against the trajectory of technological development, which tends toward the proliferation of information and alternatives. Filtering tries to build a bulwark against information flow — but at the cost of denial, of inflexibility, and of an antithetical approach to technological possibilities. Rather than devoting our energies to holding back the flood of information, we and our students need to learn from the technology how best to navigate, to negotiate, to discern among the myriad alternatives for research.

What would be a more appropriate response to the dilemma of online research? In order to approach the problem of information abundance in a way accordant with the best characteristics of learning and of technology, our research practices should not constrict the scope of inquiry, but rather take advantage of the very plenitude of online information. Though individual pages may lack the familiar physical clues of metadata — the explicit clues to which we adapted under the conditions of research in physical libraries — the online reservoir has developed other, different metadata that can offer us different clues toward more refined research, if we look for the clues indigenous to online communication rather than bemoan the transition from the good old days.

The first generation of web search engines, for example, relied on the content of web pages for cataloguing and retrieving information in response to searches. We were taught, once upon a time, to put relevant keywords into tags specifically named for metadata, so that search engines might more rapidly find our pages even when we don’t specifically mention those keywords. That didn’t last long; in relatively short order, the attention merchants realized that by pumping every possible word into their metadata, they could thrust themselves before the gaze of reluctant researchers. Moreover, the search results weren’t ordered by a particularly useful principle; as John Milton said of patristic exegesis, so might we say of search engine results, that “Whatsoever time or the heedless hand of blind chance hath drawn from of old to this present in her huge Dragnet, whether Fish or Seaweed, Shells or Shrubbs, unpicked, unchosen, those are the Fathers” (or, “early search engine results”).*7*

Enter the second generation of search engines, what we may call the Google era. What Sergey Brin and Larry Page realized was that they could produce more useful search results if they didn’t simply spider the contents of web pages, but analyzed the relative prominence of the pages they were spidering. They devised an algorithm that generated a recursive measure of the importance of each page based on the number of other pages that linked to it. The more pages that link to your page, the higher your page will appear in the list of results for a given search term.
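A minimal sketch of that recursive measure may help. It is a textbook simplification and not Google's production algorithm: the `pagerank` function, the damping value of 0.85, the fixed iteration count, and the toy page names are all assumptions made for illustration. Each page repeatedly passes a share of its current importance to the pages it links to, so a link from a prominent page counts for more than a link from an obscure one.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank by power iteration.

    links maps each page to the list of pages it links to.
    damping and iterations are illustrative defaults (assumptions).
    """
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page keeps a small baseline share of attention.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Split this page's weight among the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its weight evenly.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical four-page web: three pages link to "scholar-a".
toy_web = {
    "scholar-a": ["scholar-b", "scholar-c"],
    "scholar-b": ["scholar-a"],
    "scholar-c": ["scholar-a"],
    "study-group": ["scholar-a"],
}
ranks = pagerank(toy_web)
best = max(ranks, key=ranks.get)
```

In this toy web the page that everyone links to ends up with the largest share, and the page nobody links to ends up with the smallest.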

All that Google does is contextualize the content of your page with the metadata of how many other pages think that your page is worth attention. They take pains to emphasize that theirs is a mathematical ranking, so that when undesirable outcomes arise, as when an anti-Semitic site wrests its way to the top spot for the search term “Jew,” they refuse to jigger the rankings. On their account, if they tailor the results for one search, they’ll open the door to endless fiddling to promote one site or demote another.*8*

Google’s refinement of search-engine engineering makes all the difference in the world. Whereas once searches returned results based on how early in the document your search term appeared, or what percent of the content your search constituted, or some other characteristic, now searches return results that usually show the pages other users have identified as worth attention — all on the basis of existing metadata. Unfortunately, the democratic character of Google search results somewhat vitiates Google’s value as a research tool; for my students’ research purposes, one link from N. T. Wright or Dominic Crossan should outweigh hundreds of votes from St. Wilgefortis Episcopal Church Bible Study Group. Google improves the researcher’s odds, but still leaves much to be desired as a do-it-all tool.

Google itself has recognized that its one-size-fits-all approach doesn’t suit all web searches equally, and its advertisers have realized that their ad dollars could be targeted even more precisely. Google has recently begun offering a personalized web search service, with which a user can select particular topic areas of interest, which subsequent Google searches then emphasize (“religion,” “sports,” “American Literature,” and so on). The search results still follow Google’s PageRank algorithm, but the engine shifts the weights to emphasize religiously-oriented sites. You can see right away a number of weaknesses to this plan: Who decides what counts as a “religious” site? How much emphasis should the search engine assign to which sites? What if a religiously-active baseball-loving American Lit professor just wants to find information about Golden Retrievers? But the premise is on target. Google’s personalized searches move beyond a model wherein all searches are the same, to a model where different searches anticipate different kinds of results.*9*

Now, let’s imagine a function that doesn’t exist yet. Let’s imagine that before you run a search, you could stipulate one or more URIs that should serve as touchstones for weighting subsequent links. The further a result is from one of these touchstone sites, the lower the search engine will rank that site. If the touchstone site(s) link directly to a relevant site, that site would appear first in the results; if a relevant site had only a tenuous connection to the touchstone site, it would appear late in the results.
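One way to picture such a function is as a breadth-first walk outward from the touchstone sites. The sketch below is only an assumption about how it might work, since no such service exists: `seed_distances`, `seeded_search`, and the toy page names are hypothetical, and "distance" is taken to mean the fewest link hops from any touchstone.

```python
from collections import deque


def seed_distances(links, seeds):
    """Breadth-first search: fewest link hops from any seed to each page."""
    distance = {seed: 0 for seed in seeds}
    queue = deque(seeds)
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in distance:
                distance[target] = distance[page] + 1
                queue.append(target)
    return distance


def seeded_search(links, seeds, matches):
    """Order matching pages by closeness to the seeds; unreachable pages go last."""
    distance = seed_distances(links, seeds)
    unreachable = float("inf")
    # Ties are broken alphabetically so the ordering is deterministic.
    return sorted(matches, key=lambda page: (distance.get(page, unreachable), page))


# Hypothetical link graph: who links to whom.
toy_web = {
    "seed-scholar": ["mark-commentary", "colleague"],
    "colleague": ["mark-article"],
    "stranger": ["mark-rant"],
}
# Pages that matched the search terms, in no particular order.
matches = ["mark-rant", "mark-article", "mark-commentary"]
results = seeded_search(toy_web, ["seed-scholar"], matches)
# results == ["mark-commentary", "mark-article", "mark-rant"]
```

The page with no connection to the touchstone still appears, only later in the list; nothing is filtered out.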

With this sort of seeded search, I could enter the URIs for Mark Goodacre, Jim Davila, and Sheila McGinn (for instance) as the touchstones — and then search for terms pertinent to the Gospel of Mark, confident that sites that my reference authorities deem worthy of attention would rise to the surface. Such a facility — though as I say, it is not yet available — would not be difficult to construct; the idea has generated positive reception online, and has been the subject of several conversations with developers at Technorati, the web search engine that focuses on volatile information (news pages, weblogs, and so on). Seeded-search capability would lend a clear focus to the weighting that Google’s personalized searches try to do with vague topical headings, and would add a great deal of value to the search results. While an academic searcher might want to check results against leading scholars in her field, a more casual searcher might want to find out what films Roger Ebert, Elvis Mitchell, and Kenneth Turan all agree are good.

That possibility, in turn, points to another weakness in the Google algorithm — that all links count as positive indicators in Google’s system. There’s no way to distinguish my linking (for ease of reference) to an essay I think gravely misguided, from my linking (as an encouragement to visit the site) to a page I think illuminating. To that end, Technorati’s Director of Engineering Kevin Marks has proposed including in anchor links a simple rel attribute value stating “vote-for” or “vote-against,” to indicate approval or disapproval of the site linked to.*10* Search engines could then readily weight their results to reflect the difference between a site that many people disdain, a site that many applaud, and a site that’s generating a lot of discussion pro- and con. Since the rel attribute already exists (though it’s underused), such a change would not require action by a standards bureau; you could begin writing vote links into your HTML tonight.
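As a rough illustration of how a search engine might read such links, the sketch below uses Python's standard `html.parser` module to collect anchors whose rel attribute carries one of the two values named above, then counts approvals and disapprovals per URL. The class and function names and the example.org URLs are hypothetical, and the details of the actual proposal may differ from this reading of it.

```python
from collections import Counter
from html.parser import HTMLParser


class VoteLinkParser(HTMLParser):
    """Collect (href, vote) pairs from anchors carrying a vote in rel."""

    def __init__(self):
        super().__init__()
        self.votes = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        # rel may hold several space-separated values, or be absent.
        rel_values = (attributes.get("rel") or "").split()
        for vote in ("vote-for", "vote-against"):
            if href and vote in rel_values:
                self.votes.append((href, vote))


def tally_votes(html_documents):
    """Count vote-for and vote-against links per URL, kept separately."""
    votes_for, votes_against = Counter(), Counter()
    for html in html_documents:
        parser = VoteLinkParser()
        parser.feed(html)
        for href, vote in parser.votes:
            (votes_for if vote == "vote-for" else votes_against)[href] += 1
    return votes_for, votes_against


# Hypothetical pages linking to two hypothetical essays.
pages = [
    '<p><a rel="vote-for" href="http://example.org/essay">illuminating</a></p>',
    '<p><a rel="vote-against" href="http://example.org/essay">misguided</a> '
    '<a rel="vote-against" href="http://example.org/screed">worse</a></p>',
    '<p><a href="http://example.org/screed">no opinion stated</a></p>',
]
votes_for, votes_against = tally_votes(pages)
```

Keeping the two counts separate, rather than netting them out, preserves the difference between a page nobody has an opinion about and a page that is drawing votes on both sides.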

By combining Vote Links with seeded search, a third generation of search engine usefulness could leapfrog beyond Google’s bare link-counting. Such an implementation of searching would serve many of the purposes of filtered links pages; it would amplify the likelihood that a researcher would find a positively-regarded source, without requiring that anyone maintain a vast (growing, aging) links hierarchy. It would flex depending on the seed sites named in the initial search, so that various theological and academic factions would not need to generate and maintain sites customized to their particular interests and commitments. The Vote Links-Seeded Search combination provides a technologically-appropriate response to the convergence of information avalanche and the loss of metadata.
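A toy version of the combination might weight each vote by how close the voter stands to the seed sites. Everything in this sketch is an assumption made for illustration: the `weighted_scores` function, the choice to follow only approving links outward from the seeds, the weight of 1 / (1 + hops), and the page names are not part of any existing proposal or service.

```python
from collections import deque


def hops_from_seeds(links, seeds):
    """Fewest link hops from any seed to each reachable page."""
    distance = {seed: 0 for seed in seeds}
    queue = deque(seeds)
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in distance:
                distance[target] = distance[page] + 1
                queue.append(target)
    return distance


def weighted_scores(vote_links, seeds):
    """Score targets from (voter, target, vote) triples, vote being +1 or -1.

    Assumption: trust spreads only along approving links, and a vote
    counts for less the further its voter is from the seeds.
    """
    approving = {}
    for voter, target, vote in vote_links:
        if vote > 0:
            approving.setdefault(voter, []).append(target)
    distance = hops_from_seeds(approving, seeds)

    scores = {}
    for voter, target, vote in vote_links:
        if voter in distance:  # ignore voters the seeds never lead to
            weight = 1.0 / (1 + distance[voter])
            scores[target] = scores.get(target, 0.0) + vote * weight
    return scores


# Hypothetical vote links: (voter, target, +1 for vote-for, -1 for vote-against).
vote_links = [
    ("seed", "colleague", +1),
    ("seed", "crank", -1),
    ("colleague", "article", +1),
    ("crank", "pamphlet", +1),
    ("stranger", "crank", +1),
]
scores = weighted_scores(vote_links, ["seed"])
```

Here the seed's disapproval of one page stands, the stranger's approval of that page is ignored, and the article endorsed by a trusted colleague earns a smaller but positive score.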

But I’m not talking to you tonight to sell a particular plan for installing and extracting metadata. The point of describing this potential hack for improving search functionality lies not in seeking your endorsement, but in calling attention to the central issue for electronically-informed pedagogy — and that is, in the end, essentially the same issue as for print-based pedagogy, blackboard-based pedagogy (remembering that the blackboard constituted a revolutionary innovation in educational technology when introduced in 1809), and peripatetic philosophical conversation. One of the leading lessons in teaching technological learners is that people can be credulous and uncritical in any information medium. In person, in print, on TV, at movies, online, wherever, people do not attain critical judgment just from being exposed to more information, or to information in a different shape and texture. The best teachers, those who most truly teach, help students make their way through confusing thickets of unfamiliar information. We help students discover how to exercise the faculty of judgment, so that eventually they would ideally be able to reckon for themselves how sound a web page, an article, or the other side of a casual conversation might be.

We teachers have been wrestling with that daunting challenge from Socrates onward. The challenge changes somewhat when we pursue it in the context of digitally-mediated information, but if we communicate to our students a fixation on the peculiarity of digital mediation, we will almost certainly miss the many elements of critical assessment that depend less on how we encounter information than upon the questions we ask of it, the connections we make with it, the uses to which we put it. If we never sniff an envelope, we’ll never learn about the spread of cholera in colonial America; if we never examine the names of those who’ve borrowed library books before us, we won’t derive the benefit of a rough-and-ready system of faculty endorsement. And if we conduct research only by retrieving references off an approved list of possible sources, it will take us that much longer to learn what makes those sources more or less reliable than others.

Which is another reason that a more technologically-apt search process serves us all better than does a filtered links page. The blessing and the bane of Google is its open-ended quest for more; if we train learners to expect a limited range of acceptable sources, we re-impose an intellectual subordination from which higher education, we might hope, would deliver students. How will they handle their inquiry on the day our links page fails them, or inquiries that involve topics that our links don’t cover? The approach to searching that I commend tonight — or one like it — offers a degree of guidance to an inquiring learner, without foreclosing the range of possible answers.*11* So the pedagogy lesson that theological educators stand to learn from Google, the lesson that I propose tonight, is that we best serve the core obligation of our teaching by tackling head-on the challenge of cultivating our students’ critical sensibility in order to help them employ digital technology in their own learning as discriminating, critical users.

*1* John Seely Brown and Paul Duguid, The Social Life of Information (Cambridge, Mass.: Harvard Business School Press, 2000), 173f.

*2* Quoted by Jon Udell in his column for InfoWorld, http://www.infoworld.com/article/04/04/09/15OPstrategic_1.html, visited April 15, 2004.

*3* This provides an accidental example of Lawrence Lessig’s point that changes in technology effect changes in the law, whether that change be deliberate or unintentional. Had the FBI wanted to know whether I was taking out terroristic books from the Yale Divinity Library, they would only have needed to go to a suspicious book in the stacks and look at its circulation card. Once that record becomes a matter of bits stored in an inaccessible database, however, my library borrowing pattern is no longer a public, but a private matter.

*4* http://philoblogger.blogspot.com/2004_01_01_philoblogger_archive.html#107398000949672067, last visited April 15, 2004.

*5* Ray Ozzie, from a pamphlet written in 2000, cited by Richard Eckel at http://www.groove.net/blog/?month=03&year=2004#3EB75869-B4E2-4EB4-B272-E17D3B3D6882.

*6* Ian L. S. Balfour, “Tertullian On and Off the Internet.” Journal of Early Christian Studies. 8:4 (2000): 579.

*7* Cited in F. W. Farrar, History of Interpretation (London: Macmillan, 1886).

*8* See http://www.jewishsf.com/content/2-0-/module/displaystory/story_id/21783/format/html/displaystory.html

*9* Actually, Google has already been doing this for a while. If you search for a phone number, Google will return the name and address for a listed number. If you search for a quantity in one unit of measurements and name another system of measurement (“15 miles in kilometers”), Google will calculate the quantity in the second units.

*10* See discussions at http://www.corante.com/many/archives/2004/02/14/vote_links.php, http://epeus.blogspot.com/2004_02_01_epeus_archive.html#107693111184685862, and http://developers.technorati.com/wiki/VoteLinks.

*11* A theory that doesn’t appear on my links page will never come to a compliant student’s attention, after all, whereas a seeded search simply assigns that unwelcome result a place below more conventional citations.